In this first part, my goal is to pull together the scattered pieces of documentation I found for the cluster setup and networking.

A must-read is the official documentation. I thought it was great, but be prepared for a lot of reading: it’s not a one-command install by any means. I’m sure there are plenty of sources on automating this turn-up (which is what all the big cloud providers do for you), but I wasn’t interested in that, so it won’t be covered in these Kubernetes posts.

Two topics confused me on my first install of Kubernetes: the container runtime (which the kubelet talks to through the Container Runtime Interface, or CRI) and the network plugin install. I mostly cover the network plugin install below, in case it helps others.

First, I used my automated VM builds (covered in an earlier post) to build four Ubuntu 22.04 VMs: one controller and three worker nodes.

As you’ll see if you dive into the prerequisites for kubeadm, you have to install a container runtime. My first attempt, with containerd, failed, which I’ll blame on not knowing what I was doing. On my second attempt I used Docker Engine (which Kubernetes reaches through the cri-dockerd adapter), and that worked. I was able to follow the Kubernetes instructions without issue.

Once the container runtime was set up per the Kubernetes instructions, the Kubernetes packages could be installed on the control node. The worker nodes need kubelet and kubeadm as well, since they run kubeadm join later:

```bash
sudo apt-get install -y kubelet kubeadm kubectl
```

Now it’s time to bootstrap the cluster. If you’re using this post as a step-by-step guide, I suggest coming back once the install is done and the cluster is up to read more about what kubeadm init does, as it is intriguing.

```bash
sudo kubeadm init --pod-network-cidr 10.200.0.0/16 \
  --cri-socket unix:///var/run/cri-dockerd.sock \
  --service-cidr 192.168.100.0/22
```
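Both ranges in that command need to be free on the network. They also must not overlap each other or the node subnet.

Below is a minimal bash sketch for checking that before running kubeadm init. The function names are hypothetical helpers, not part of kubeadm, and the script handles IPv4 only. It assumes 192.168.66.128/25 is the node subnet, based on the route table in this post.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: detect overlapping IPv4 CIDRs before running kubeadm init.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 10.200.0.0/16.
cidr_bounds() {
  local len=${1#*/} start size
  start=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - len) ))
  start=$(( start & ~(size - 1) ))   # align to the network address
  echo "$start $(( start + size - 1 ))"
}

# Succeed (exit 0) if two CIDRs share at least one address.
cidrs_overlap() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_bounds "$1")"
  read -r b_first b_last <<< "$(cidr_bounds "$2")"
  (( a_first <= b_last && b_first <= a_last ))
}

# Compare every pair of CIDRs; print each clash and fail if any were found.
check_cidrs() {
  local -a cidrs=("$@")
  local i j rc=0
  for (( i = 0; i < ${#cidrs[@]}; i++ )); do
    for (( j = i + 1; j < ${#cidrs[@]}; j++ )); do
      if cidrs_overlap "${cidrs[i]}" "${cidrs[j]}"; then
        echo "overlap: ${cidrs[i]} and ${cidrs[j]}"
        rc=1
      fi
    done
  done
  return "$rc"
}

# Pod CIDR, service CIDR, and (assumed) node subnet from this post.
check_cidrs 10.200.0.0/16 192.168.100.0/22 192.168.66.128/25 && echo "no overlaps"
```

Add any other ranges already routed in your network to the check_cidrs call.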

Dissecting what is above:

- `--pod-network-cidr`: this is an unused CIDR range I had available. By default it isn’t exposed outside the nodes, but it can be. Kubernetes allocates a /24 per node out of this space by default. It is something I would like to investigate changing, but I ran into complications and, rather than debug them, I just accepted using a bigger space for growth.

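- `--cri-socket`: this instructs the setup process to use Docker Engine through cri-dockerd. kubeadm tries to detect the runtime socket on its own, but Docker Engine also installs containerd. With both sockets present, kubeadm needs to be told which one to use. My understanding is that if you use containerd alone, this flag isn’t needed.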

- `--service-cidr`: again, I picked a dedicated range (the kubeadm default is 10.96.0.0/12). The network plugin I used can announce these addresses via BGP, and I wanted a range that was free on my network. I cover the networking piece more below.
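To make the sizing concrete, here is how the two ranges break down:

- **Pod range:** the controller manager hands each node a /24 out of the pod CIDR by default (its `--node-cidr-mask-size` setting), so a /16 leaves room for 256 nodes.
- **Service range:** the API server gives the built-in `kubernetes` Service the first address, and kubeadm gives the cluster DNS Service the tenth.

The bash helpers below are a hypothetical sketch of that arithmetic, IPv4 only. Which /24 a given node actually receives is up to the controller manager, so the subnet index is only illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the CIDR arithmetic behind kubeadm init (IPv4 only).

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Number of /MASK subnets that fit in CIDR, e.g. how many nodes can get a /24.
subnet_count() {
  local len=${1#*/}
  echo $(( 1 << ($2 - len) ))
}

# The Nth (0-based) /MASK subnet inside CIDR; assumes CIDR is given as its network address.
nth_subnet() {
  local len=${1#*/} mask=$2 n=$3 base
  base=$(ip_to_int "${1%/*}")
  if (( mask < len || n < 0 || n >= (1 << (mask - len)) )); then
    echo "subnet index out of range" >&2
    return 1
  fi
  echo "$(int_to_ip $(( base + n * (1 << (32 - mask)) )))/$mask"
}

# The Nth address of CIDR, counting the network address as 0.
nth_addr() {
  int_to_ip $(( $(ip_to_int "${1%/*}") + $2 ))
}

echo "nodes that fit:        $(subnet_count 10.200.0.0/16 24)"
echo "pod subnet, index 3:   $(nth_subnet 10.200.0.0/16 24 3)"
echo "kubernetes Service IP: $(nth_addr 192.168.100.0/22 1)"
echo "cluster DNS IP:        $(nth_addr 192.168.100.0/22 10)"
```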

At the end of the init process, kubeadm printed a kubeadm join command. It contains a bootstrap token and a hash of the cluster CA, which the worker nodes use to join the controller.
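The `--discovery-token-ca-cert-hash` value in that join command is `sha256:` followed by the SHA-256 of the cluster CA’s public key. It lets a joining node verify it is talking to the right control plane.

If you need to recompute it on the controller, the kubeadm documentation gives an openssl pipeline for this. Below it is wrapped in a small bash function. The function name is my own, and it assumes kubeadm’s default RSA CA key at /etc/kubernetes/pki/ca.crt.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the openssl pipeline from the kubeadm docs.
# Prints "sha256:<hex>" for the public key of the given (RSA) CA certificate.
ca_cert_hash() {
  local crt=${1:-/etc/kubernetes/pki/ca.crt}   # kubeadm's default CA path
  local hex
  [ -r "$crt" ] || { echo "cannot read $crt" >&2; return 1; }
  hex=$(openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')   # drop the "(stdin)= " style prefix
  echo "sha256:$hex"
}

# On the controller:
#   ca_cert_hash
```

If the original join command is lost, or the token has expired (bootstrap tokens last 24 hours by default), `kubeadm token create --print-join-command` on the controller prints a fresh one.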

```bash
sudo kubeadm join 192.168.66.165:6443 --token <token> \
  --discovery-token-ca-cert-hash <cert> \
  --cri-socket unix:///var/run/cri-dockerd
```

At this point, running `kubectl get nodes` showed the worker nodes, but none were Ready until a network plugin was running and configured.
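Until the network plugin is in place, the nodes report NotReady. Here is a small bash sketch for checking (or waiting on) that from a script.

The function names and retry defaults are my own. It simply parses the STATUS column of `kubectl get nodes --no-headers`, so a cordoned node (`Ready,SchedulingDisabled`) is also reported as not Ready.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: report nodes that are not Ready, or wait until all are.

# Print the names of nodes whose STATUS column is not exactly "Ready".
# Fails if kubectl itself fails, so an unreachable API server isn't read as "all Ready".
not_ready_nodes() {
  local out
  out=$(kubectl get nodes --no-headers) || return 1
  awk 'NF && $2 != "Ready" { print $1 }' <<< "$out"
}

# Poll up to TRIES times, DELAY seconds apart, until no node is pending.
wait_for_ready() {
  local tries=${1:-30} delay=${2:-10} i pending
  for (( i = 1; i <= tries; i++ )); do
    if pending=$(not_ready_nodes); then
      [ -z "$pending" ] && return 0
      echo "not Ready yet: ${pending//$'\n'/ }" >&2
    else
      echo "kubectl get nodes failed, retrying" >&2
    fi
    sleep "$delay"
  done
  return 1
}

# Example: wait up to about 5 minutes after applying the network plugin.
#   wait_for_ready 30 10 && echo "all nodes Ready"
```

kubectl can also block on this directly with `kubectl wait --for=condition=Ready nodes --all --timeout=300s`.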

Network Plugin

I tried several network plugin projects and ended up landing on kube-router. It seemed the best fit for my end goal of advertising different services or pods into my network via BGP.

I used the example YAML file from the kube-router project page and only had to make slight modifications to define the router to peer with.

In the args section of the “kube-router” container, I defined the peer router IPs and ASN information. For example:

```yaml
containers:
- name: kube-router
  image: docker.io/cloudnativelabs/kube-router
  imagePullPolicy: Always
  args:
  - --run-router=true
  - --run-firewall=true
  - --run-service-proxy=true
  - --bgp-graceful-restart=true
  - --kubeconfig=/var/lib/kube-router/kubeconfig
  - --advertise-cluster-ip=true
  - --advertise-external-ip=true
  - --cluster-asn=65170
  - --peer-router-ips=192.168.66.129
  - --peer-router-asns=64601
```

I made sure to adjust these settings to fit my environment. You can decide whether you want cluster IPs and external IPs advertised. I enabled both, but with more understanding, I only envision needing external IPs to be advertised (for LoadBalancer services, for example).
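If you peer with more than one router, `--peer-router-ips` and `--peer-router-asns` both take comma-separated lists. The hedged bash sketch below builds the two args from `ip:asn` pairs so the lists stay aligned.

It also checks that each ASN falls in a private-use range from RFC 6996 (64512 to 65534, or 4200000000 to 4294967294); both ASNs above do. The function names are hypothetical. Pairing the lists by position is my reading of the kube-router docs, so double-check it against the version you deploy.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for kube-router's BGP peer flags (IPv4 peers only).

# True if ASN is in a private-use range (RFC 6996).
is_private_asn() {
  local asn=$1
  (( (asn >= 64512 && asn <= 65534) || (asn >= 4200000000 && asn <= 4294967294) ))
}

# Turn "ip:asn" pairs into matching --peer-router-ips/--peer-router-asns args.
# Assumption: kube-router pairs the two comma-separated lists by position.
peer_args() {
  local -a ips=() asns=()
  local pair ip asn
  for pair in "$@"; do
    ip=${pair%%:*}
    asn=${pair##*:}
    if [[ $pair != *:* || ! $asn =~ ^[0-9]+$ ]]; then
      echo "bad peer spec: '$pair' (want ip:asn)" >&2
      return 1
    fi
    is_private_asn "$asn" || echo "note: ASN $asn is not a private ASN" >&2
    ips+=("$ip")
    asns+=("$asn")
  done
  local IFS=,   # join array elements with commas below
  printf '%s\n' "--peer-router-ips=${ips[*]}" "--peer-router-asns=${asns[*]}"
}

peer_args 192.168.66.129:64601
```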

I ran the deployment with:

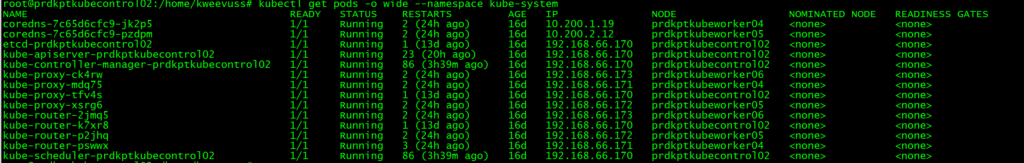

kubectl apply -f "path_to_yaml_file"After this, I saw several containers being made:

I saw the above containers will all be running, and when I looked at the route table I saw installed routes to the pod network cidrs to each host:

```text
$ ip route
default via 192.168.66.129 dev eth0 proto static
10.200.1.0/24 via 192.168.66.171 dev eth0 proto 17
10.200.2.0/24 via 192.168.66.172 dev eth0 proto 17
10.200.3.0/24 via 192.168.66.173 dev eth0 proto 17
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
192.168.66.128/25 dev eth0 proto kernel scope link src 192.168.66.170
```

Check out the kube-router documentation for more detail. In short, the kube-router pods (one per node) notice nodes joining the cluster, form BGP peerings, and install routes so that pods on different nodes can communicate.
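To check this from a script instead of eyeballing `ip route`, here is a minimal bash sketch. It lists the routes whose destination starts with the pod CIDR prefix, then reports any expected node IP that isn’t a next hop.

The function names are hypothetical, and `10.200.` is simply the prefix of this post’s 10.200.0.0/16. Pass the other nodes’ IPs, not the IP of the host you run it on, since a node doesn’t route to its own pod subnet via itself.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: inspect the pod-subnet routes kube-router installed.

# Print "destination next-hop" for routes whose destination starts with PREFIX.
pod_routes() {
  local prefix=${1:-10.200.}
  ip route | awk -v p="$prefix" 'index($1, p) == 1 && $2 == "via" { print $1, $3 }'
}

# Print each expected node IP that is not the next hop of any pod-subnet route.
missing_peers() {
  local prefix=$1 routes node
  shift
  routes=$(pod_routes "$prefix")
  for node in "$@"; do
    awk -v n="$node" '$2 == n { found = 1 } END { exit (found ? 0 : 1) }' <<< "$routes" \
      || echo "$node"
  done
}

# On the host the route table above came from (192.168.66.170):
#   missing_peers 10.200. 192.168.66.171 192.168.66.172 192.168.66.173
```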

The CoreDNS pods, which stay Pending until a network plugin is installed, also started. `kubectl get nodes` then showed all the nodes in a Ready state:

```text
$ kubectl get nodes
NAME                  STATUS   ROLES           AGE   VERSION
prdkptkubecontrol02   Ready    control-plane   16d   v1.31.1
prdkptkubeworker04    Ready    <none>          16d   v1.31.1
prdkptkubeworker05    Ready    <none>          16d   v1.31.1
prdkptkubeworker06    Ready    <none>          16d   v1.31.1
```

At this point I had a working Kubernetes cluster!